From Pixels to Personas: The Definitive Evolution of Video Face Swap Technology

Video face swap technology has developed rapidly, growing from a handful of academic experiments into one of the most influential digital media phenomena of the twenty-first century. At first, it was complex computer vision research carried out in high-end laboratories. Now anyone with a smartphone can use it to achieve seamless identity replacement in a few seconds. This evolution is not just entertainment; it represents a paradigm shift in how we perceive digital reality, visual effects, and personal identity in the era of generative AI.

The Genesis of Digital Identity: From Manual CGI to Early Academic Foundations

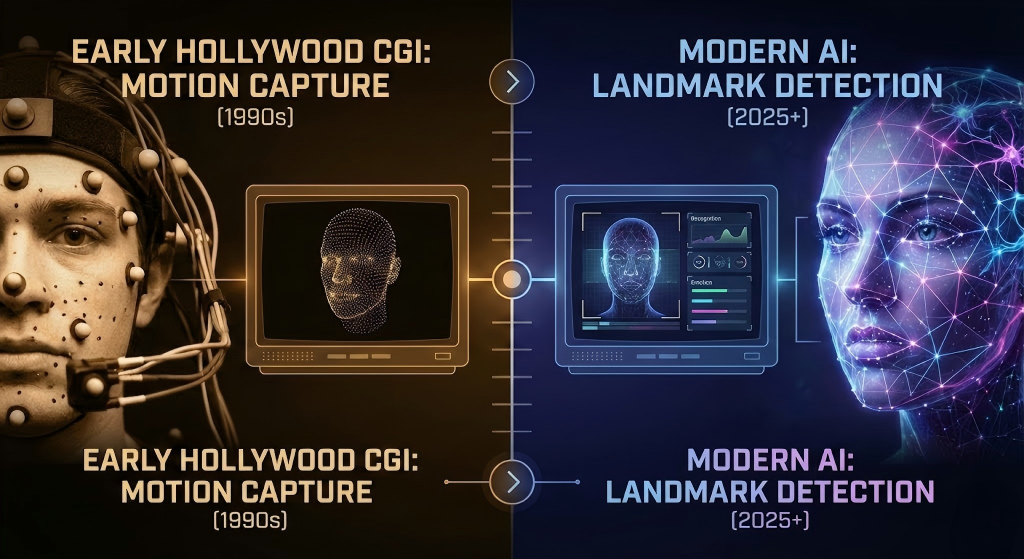

The journey of video face swap technology did not begin with AI. It began in the labor-intensive studios of Hollywood and the theoretical frameworks of computer science around the turn of the twenty-first century. Before the era of deep learning, swapping a face in video required manual masking, 3D tracking, and high-budget motion capture systems. Early film milestones, such as the digital recreation of actors in movies like Gladiator or the de-aging of characters in The Curious Case of Benjamin Button, used traditional computer-generated imagery (CGI) to achieve what we now think of as "face replacement." However, these methods were extremely expensive, requiring months of labor from professional visual effects artists. At the same time, academic researchers were laying the foundation for automated processes. In the mid-2000s, papers on "active appearance models" and "constrained local models" began to explore how computers could recognize human facial landmarks (eyes, nose, and mouth) and map them onto 3D meshes.

In this "pre-AI" era, the main challenge was the "uncanny valley." When a face was altered in a photo or video, the lighting, shadows, and subtle micro-expressions often felt "off," which made audiences uneasy. The transition from still images to smooth video added further complexity, such as temporal consistency: ensuring that the swapped face does not "flicker" or move unnaturally between frames. It was not until the arrival of large-scale datasets (such as CelebA) and the exponential growth of GPU processing capacity that the industry began moving toward the automated synthesis we see today. Platforms like faceswap-ai.io now stand on the shoulders of these pioneers, providing cloud-based tools that handle these complexities and removing the need for the multimillion-dollar budgets that large film studios once required.
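To make the temporal consistency problem concrete, here is a minimal sketch of one simple way to damp frame-to-frame jitter: an exponential moving average over tracked landmark positions. The function name `smooth_landmarks` and the `alpha` parameter are illustrative assumptions, not part of any specific tool mentioned in this article, and production systems typically use more elaborate filters.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def smooth_landmarks(frames: List[List[Point]], alpha: float = 0.6) -> List[List[Point]]:
    """Apply an exponential moving average to per-frame landmark positions.

    `frames` holds one list of (x, y) landmarks per video frame.
    `alpha` near 1.0 trusts the current frame; lower values smooth more
    (less flicker, but more lag behind fast motion).
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")

    smoothed: List[List[Point]] = []
    for landmarks in frames:
        if not smoothed:
            # The first frame has no history, so it is kept unchanged.
            smoothed.append(list(landmarks))
            continue
        previous = smoothed[-1]
        smoothed.append([
            (alpha * x + (1 - alpha) * px, alpha * y + (1 - alpha) * py)
            for (x, y), (px, py) in zip(landmarks, previous)
        ])
    return smoothed
```

A landmark that jumps abruptly for a single frame is pulled back toward its previous position, which is the "anti-flicker" effect in its simplest form.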

The GAN Revolution: How Deep Learning Democratized the Deepfake

The real explosion of video face swap technology traces back to 2014, when Ian Goodfellow and his colleagues introduced generative adversarial networks (GANs). This mathematical breakthrough introduced a dual-model system: a "generator" that creates an image and a "discriminator" that tries to detect whether it is fake. This constant internal competition allowed AI to produce hyper-realistic faces that could fool even the human eye. By 2017, the technology had escaped the laboratory and hit the mainstream via a Reddit user named "Deepfakes," who released an open-source framework that allowed hobbyists to perform gif face swap and video conversion using consumer-grade hardware.
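The generator/discriminator competition can be illustrated with the two loss values each side tries to minimize. The sketch below assumes the discriminator outputs a probability that an image is real; the function names are hypothetical, and the generator loss shown is the commonly used "non-saturating" variant rather than the only possible formulation.

```python
import math
from typing import Sequence

EPS = 1e-12  # guards against log(0)


def discriminator_loss(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """Binary cross-entropy for the discriminator.

    d_real: discriminator outputs (probability of "real") on real images.
    d_fake: discriminator outputs on generated images.
    The loss is low when real images score near 1 and fakes score near 0.
    """
    real_term = sum(-math.log(max(p, EPS)) for p in d_real) / len(d_real)
    fake_term = sum(-math.log(max(1.0 - p, EPS)) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake: Sequence[float]) -> float:
    """Non-saturating generator loss.

    The loss is low when the discriminator is fooled, i.e. when it assigns
    a high "real" probability to generated images.
    """
    return sum(-math.log(max(p, EPS)) for p in d_fake) / len(d_fake)
```

When the discriminator can only guess (outputs of 0.5 everywhere), its loss sits at 2·ln 2; training alternates between pushing that value down for the discriminator and pushing the generator's loss down in turn.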

This period marked the "Democratization Era." For the first time, the core algorithms for face synthesis were public. The process generally followed a specific pipeline:

- Extraction: The AI identifies and crops faces from thousands of frames of the source and target videos.

- Training: An encoder-decoder model learns the unique features of both faces (eye shape, jawline, skin texture).

- Conversion: The model "reconstructs" Face A using the expressions and movements of Face B.

This era also saw the rise of specialized tools such as watermark remover and background remover, which were crucial for cleaning up the artifacts left by early AI models. As the technology matured, the community moved from low-resolution 64x64 pixel swaps to high-definition output. The loss function (the mathematics that tells the AI how "wrong" it is) also grew more sophisticated, combining "structural similarity" and "perceptual loss" terms so that the color of the swapped face matches the lighting of the original video as closely as possible.
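As a rough illustration of how a structural term and a perceptual term can be combined into one loss, the sketch below computes a single-window (global) SSIM over grayscale pixel values and adds a feature-distance term. Everything here is an assumption for illustration: real pipelines compute SSIM over local windows and take perceptual features from a pretrained network, whereas `toy_features` is only a hypothetical stand-in, and the weights are arbitrary.

```python
from typing import Callable, List, Sequence

FeatureFn = Callable[[Sequence[float]], List[float]]


def global_ssim(x: Sequence[float], y: Sequence[float], dynamic_range: float = 255.0) -> float:
    """Structural similarity computed once over the whole image (no sliding window)."""
    n = len(x)
    if n == 0 or n != len(y):
        raise ValueError("images must be non-empty and the same size")
    c1 = (0.01 * dynamic_range) ** 2  # standard SSIM stabilizing constants
    c2 = (0.03 * dynamic_range) ** 2
    mu_x, mu_y = sum(x) / n, sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


def toy_features(pixels: Sequence[float]) -> List[float]:
    """Hypothetical stand-in for a pretrained feature extractor: adjacent differences."""
    return [b - a for a, b in zip(pixels, pixels[1:])]


def combined_loss(
    predicted: Sequence[float],
    target: Sequence[float],
    features: FeatureFn = toy_features,
    w_structural: float = 0.5,
    w_perceptual: float = 0.5,
) -> float:
    """Weighted sum of (1 - SSIM) and mean squared feature distance."""
    structural = 1.0 - global_ssim(predicted, target)
    f_pred, f_target = features(predicted), features(target)
    perceptual = sum((p - t) ** 2 for p, t in zip(f_pred, f_target)) / max(len(f_pred), 1)
    return w_structural * structural + w_perceptual * perceptual
```

The loss is close to zero when the swapped output matches the target and grows as structure or features diverge; in practice the two terms would also be rescaled so that neither dominates.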

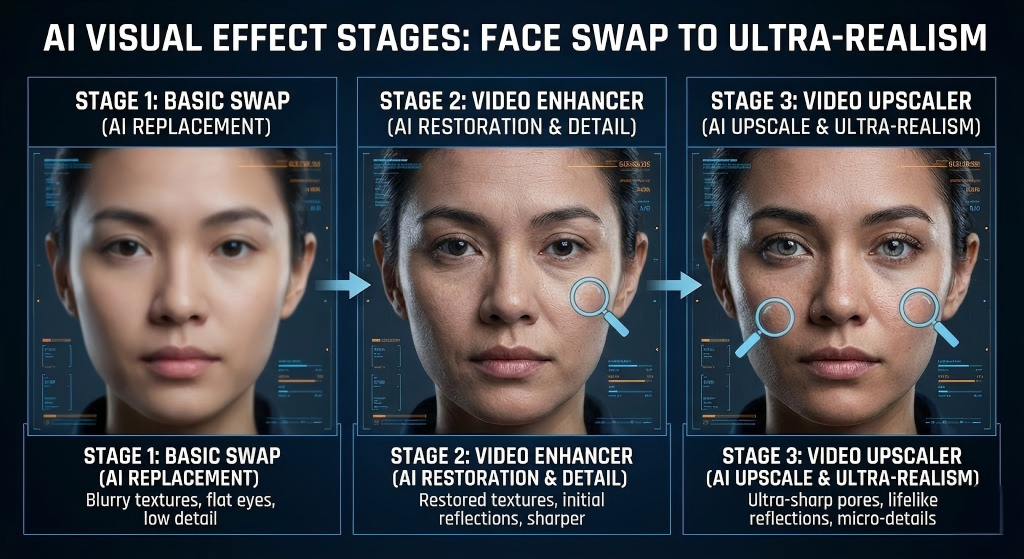

Refinement and High-Fidelity: The Integration of Post-Processing Tools

As we entered the early 2020s, the focus of video face swap shifted from simple "possibility" to "perfection." The industry recognized that although a raw AI swap is impressive, it often lacks the clarity of professional cinematography. This led to the integration of advanced post-processing AI, such as video enhancer and image enhancer tools. These secondary models are designed to repair the blur that often appears when a face is warped onto the target. With a video upscaler, users can now take a 720p swap and convert it to 4K, recovering fine details such as individual pores and eyelashes that were lost during the initial synthesis.

Modern platforms, including faceswap-ai.io, consolidate this whole ecosystem into a single workflow. Users no longer need a high-end NVIDIA GPU or Python knowledge; they can access nano banana pro and other tools for fast, high-quality rendering. We have also seen the rise of specialized niche tools, such as:

- Face expression changer: To tweak the mood of the swapped face after the fact.

- Video background remover: To isolate the subject before performing a swap, ensuring no "ghosting" occurs around the hair or ears.

- Video character replacement: A more holistic approach where the AI doesn't just swap the face, but adjusts the body language and hair to match the new identity.

The technological leap here is the transition from "Swap-only" to "Context-aware" AI. Today’s models don’t just paste a face; they understand the geometry of the head in 360 degrees. This allows for extreme angles and occlusions—like a hand passing in front of a face—which used to break the illusion of early deepfakes. This level of fidelity has opened doors for high-end marketing, personalized advertising, and even the "digital resurrection" of historical figures for educational purposes.
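One common way to keep an occluding object (such as a hand) in front of a swapped face is mask-based compositing: the swapped pixels are blended in only where a face mask is active and an occluder mask is not. The sketch below is a minimal illustration under that assumption, using flat lists of pixel intensities; the function and parameter names are hypothetical, and this is not a description of how any particular platform implements it.

```python
from typing import List, Sequence


def composite_with_occlusion(
    original: Sequence[float],
    swapped: Sequence[float],
    face_mask: Sequence[float],
    occluder_mask: Sequence[float],
) -> List[float]:
    """Blend swapped pixels into the original frame, respecting occlusions.

    All inputs are flat sequences of equal length. Mask values lie in [0, 1]:
    face_mask is 1 where the new face should appear (soft edges give values
    in between), and occluder_mask is 1 where something covers the face.
    """
    if not (len(original) == len(swapped) == len(face_mask) == len(occluder_mask)):
        raise ValueError("all inputs must have the same length")

    output: List[float] = []
    for orig_px, swap_px, face, occluder in zip(original, swapped, face_mask, occluder_mask):
        # The swap is visible only where the face is present and not occluded.
        alpha = face * (1.0 - occluder)
        output.append(alpha * swap_px + (1.0 - alpha) * orig_px)
    return output
```

Soft mask edges (values between 0 and 1) are also what reduce the "ghosting" around hair and ears mentioned in the list above.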

The Future of Synthesis: Multimodal Integration and Ethics

We are now entering the most advanced stage in the evolution of video face swap: multimodal AI. At this stage, the visual swap no longer stands alone; it combines with voice cloning and lip synchronization technology to create a fully realized digital twin. This means that when you perform a video face swap, the AI can also generate a synthetic version of the target's voice and synchronize the mouth movements with a new script. This integration is the holy grail of content creation: it allows films to be localized so that actors appear to speak the target language natively, and it lets creators produce high-quality content without stepping in front of a camera.

However, with this power, the ethical and regulatory landscape has changed significantly. As barriers to entry disappear, the line between real media and synthetic media has become blurred. The industry is responding with "digital watermarking" and "blockchain verification" to ensure transparency. Tools that used to be simple face swap applications are evolving into comprehensive creative suites that prioritize user safety and consent. Looking ahead, the next frontier is real-time generative synthesis. Imagine a world where you can use a face expression changer during live video calls, or wear augmented reality glasses that perform video character replacement on the people you meet in real life.

The future of faceswap-ai.io and similar innovators lies in making these "superpowers" accessible while maintaining high quality standards. Whether it is a simple photo swap for a meme or a complex video character replacement for a feature film, this technology is heading toward a future where "digital" and "physical" are no longer separate categories. We are moving toward a "post-reality" era in which the only limit on visual storytelling is the creator's imagination, supported by a set of AI tools that can handle everything from the initial swap to the final video enhancer polish.