The Digital Renaissance of Identity: Tracing the Evolution of Video Face Swap Technology

Modern video face swap technology has fundamentally redefined our relationship with digital media, evolving from a niche CGI technique into a powerful AI-driven tool. Whether you are a content creator making viral social media clips or a film producer pursuing high-end visual effects, the ability to seamlessly change a face's identity in motion has become a cornerstone of the new creator economy. At the center of this shift is faceswap-ai.io, a platform that marks the transition from complex, high-barrier coding to accessible, high-fidelity creativity. In this in-depth exploration, we trace how facial manipulation moved from the Hollywood screen to the fingertips of every smartphone user, and where this technology is headed next.

The Pre-AI Era: From Manual Masking to Early Digital Puppetry

Long before the word "deepfake" entered the public vocabulary, face swapping was a uniquely labor-intensive process reserved for elite visual effects studios. In the 1990s and early 2000s, changing a face in a moving shot required frame-by-frame rotoscoping, manual masking, and complex lighting adjustments, and a shot lasting a few seconds could take hundreds of hours. This era was defined by "digital puppetry," in which actors' performances were meticulously mapped onto 3D wireframes. Classic examples include the digital aging and de-aging of characters and the posthumous appearances of actors in major franchises. In this period, the technology was subtractive and additive rather than generative: it depended on existing textures and manual artistic intervention. There was no "intelligence" behind the process, only the skill of compositors. However, these early milestones laid the foundation for understanding the importance of facial geometry and temporal consistency, which later became central challenges for video face swap algorithms. Those early experiments in 3D face mapping and light wrapping defined the problems of geometry, lighting, and frame-to-frame coherence that today's neural networks are trained to solve.

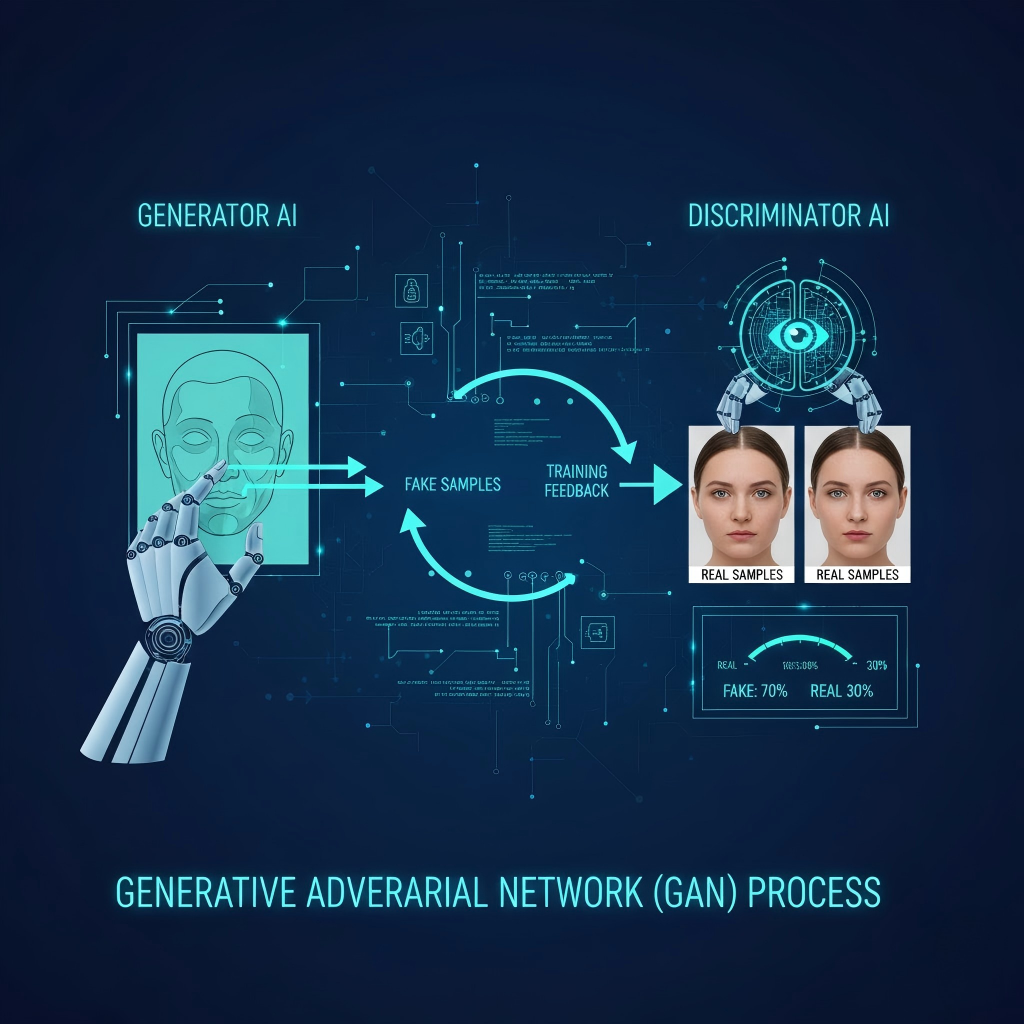

The GAN Revolution: How Generative Adversarial Networks Changed the Game

The real paradigm shift came in 2014, when Ian Goodfellow and his colleagues introduced generative adversarial networks (GANs). This adversarial approach, which pits a generator against a discriminator, enables a model to learn to produce realistic faces. Around 2017, driven by deep learning models, the first viral examples of video face swapping began to emerge. Tools from this era analyzed a source face and a target video, then reconstructed the face frame by frame with steadily increasing accuracy. As the technology matured, developers began integrating features such as an image enhancer to address the blurry, low-resolution output that plagued early deepfakes. The introduction of StyleGAN and InsightFace further refined the process and made "one-shot" swapping possible: the AI needs only a single clear photo to generate a credible video replacement. This is a huge leap from the era when thousands of images were needed to train a model for a specific face. Today, platforms like faceswap-ai.io use these advanced architectures to deliver near-instant results, blending skin tone and shadow with a precision once thought impossible outside a supercomputing lab.
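To make the generator-versus-discriminator idea concrete, here is a minimal sketch of the two competing losses in plain Python. It is an illustration only, not the code used by faceswap-ai.io or any specific tool: the function names and the sample scores are hypothetical, and the generator loss shown is the common "non-saturating" variant rather than the only possible choice.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy loss for the discriminator.

    d_real: discriminator scores in (0, 1) for real face images.
    d_fake: discriminator scores in (0, 1) for generated face images.
    The loss is low when real images score near 1 and fakes near 0.
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)


def generator_loss(d_fake):
    """Non-saturating generator loss.

    The loss is low when the discriminator scores generated images near 1,
    meaning the fakes are being mistaken for real faces.
    """
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# Hypothetical discriminator scores, chosen only for illustration.
real_faces = [0.90, 0.95]   # real images, scored as likely real
early_fakes = [0.05, 0.10]  # crude fakes, easily rejected
late_fakes = [0.45, 0.50]   # better fakes, harder to tell apart

# As the fakes improve, the generator's loss falls and the discriminator's rises.
print(generator_loss(early_fakes) > generator_loss(late_fakes))
print(discriminator_loss(real_faces, early_fakes)
      < discriminator_loss(real_faces, late_fakes))
```

In a real system both networks are trained in alternation, each trying to lower its own loss, and that tug-of-war is what gradually pushes the generated faces toward realism.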

Real-Time Synthesis and the Convergence of Multi-Modal AI

As we entered the early 2020s, the focus shifted from simple identity substitution to the full reproduction of human expression. Modern video face swapping is no longer just about the eyes and nose; it combines a facial expression changer with advanced lip-sync technology. This ensures that the swapped face not only looks like the person but also moves and reacts with the same emotional weight as the original performer. We have entered the era of multimodal generation, in which high-resolution video can be edited in real time. The emergence of diffusion models supports this evolution, offering greater stability and finer detail than traditional GANs. In addition, the modern creator's toolkit has expanded to include a video upscaler, which brings the final output up to 4K, and a watermark remover, which is used to clean up assets during production. The result is a seamless digital avatar that can be used for everything from localized marketing campaigns, in which an actor's face and language are swapped to suit different regions, to personalized game avatars that reflect a player's real appearance in high definition.

2025 and Beyond: The Era of Intelligent Content Creation

Looking ahead, the boundary between "real" and "synthetic" is becoming almost invisible. In 2025, the field of video face swap is being defined by automation and ethical transparency. New models like nano-banna Pro are pushing the limits of consumer hardware, enabling complex 3D face replacement in seconds. Beyond face swapping, we are also seeing the rise of video background remover and video character replacement tools.

These tools let creators change not only the characters but also the entire background of a scene. The integration of voice cloning technology means that digital avatars can now speak in other languages while retaining the nuances of the original voice, creating a truly immersive experience. As faceswap-ai.io continues to innovate, the focus is turning to "intent-based editing": users simply describe a change, and the AI applies it across all frames. The democratization of high-end visual effects means that an independent YouTuber now has production capabilities comparable to those of a Hollywood studio ten years ago. Although challenges around consent and authenticity remain, the technological trajectory is clear: we are moving toward a world where the only limit on visual storytelling is the creator's imagination, not technical expertise or budget.